This tech note explores the interplay between network and computing speed, applications, public money, vendors and the compulsion to stay current with technology in schools. In some refresh cycles, the applications are ahead of the network. In others, the networks are faster and more capable than needed for the available applications. At present, network technologies are faster than our needs.

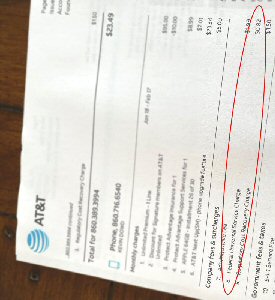

Technology is subsidized, and the subsidies drive the technology. In 1934 the Federal Communications Commission (FCC) was formed around the notion of Universal Service. The problem it was trying to solve was that telephony wasn't reaching rural communities, because telephone companies had no incentive to invest in and maintain long-haul lines for so few subscribers.

The FCC addressed the deficit by subsidizing telephony through fees assessed to carriers. These fees were passed along on users' communications bills, so telephone users in urban areas essentially paid for telephony in rural areas. The notion of Universal Service has changed, but it still exists, and this fee still appears on your phone bill today.

In 1996, the Telecommunications Act expanded the Universal Service charter to provide telephony to schools and libraries, which eventually expanded to broadband data access, too, and the Universal Service Administrative Company (USAC) was created to administer the programs. The modernization of E-Rate in 2015 added equipment to the eligible product list (category 2 funding).

There are currently four Universal Service initiatives:

- Connect America

- Lifeline, which helps low-income households pay for phone and broadband service, with enhanced support on tribal lands

- Rural Health Care, which helps pay for communications to healthcare providers in remote areas

- E-Rate

E-Rate is administered by USAC as part of its Universal Service mission. The current category 2 cycle is a five-year budget (2021-2025) of $167 per student, with a funding floor of $25,000 for smaller schools.

The E-Rate discount rate is derived from the percentage of students receiving federally subsidized lunches (NSLP) and ranges from 20 to 90%. For example, one school might receive 80% funding for its category 2 equipment and services, whereas another school might get 30%. Nationally, E-Rate is capped at $4.456 billion annually.
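The budget and discount arithmetic above can be sketched in a few lines of Python. This is a simplified, hypothetical illustration: the function names are made up, the $167 multiplier and $25,000 floor come from the figures above, and the discount rate is passed in directly rather than looked up from USAC's discount matrix (which also weighs urban versus rural status).

```python
# Hypothetical sketch of the E-Rate category 2 arithmetic described above.
# Assumptions: $167/student over five years, a $25,000 floor, and a discount
# rate supplied by the caller instead of USAC's discount-matrix lookup.

C2_PER_STUDENT = 167   # dollars per student, FY2021-2025 cycle
C2_FLOOR = 25_000      # minimum five-year budget for smaller schools


def c2_budget(students: int) -> int:
    """Five-year category 2 budget for one school, in pre-discount dollars."""
    return max(students * C2_PER_STUDENT, C2_FLOOR)


def split_cost(cost: float, discount: float) -> tuple[float, float]:
    """Return (E-Rate share, local share) of an eligible purchase."""
    # The 20-90% range mirrors the text; real values come from the matrix.
    if not 0.20 <= discount <= 0.90:
        raise ValueError("discount must be between 0.20 and 0.90")
    erate_share = cost * discount
    return erate_share, cost - erate_share


budget = c2_budget(500)                    # 500 students * $167 = $83,500
erate, local = split_cost(budget, 0.80)    # an 80% discount school
print(budget, round(erate), round(local))  # prints: 83500 66800 16700
```

At a 90% rate, like New London's, `split_cost(1.00, 0.90)` leaves roughly 10 cents of every dollar for the local share.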

At the height of the pandemic, the possibility that education had changed forever put pressure on IT initiatives and spending. Many districts already had devices, such as Chromebooks, for students. But the pandemic forced remote learning several days a week, and not all households had, or have, a broadband connection.

E-Rate came under pressure to grow its focus to include broadband access for students at home. The American Rescue Plan, March 2021, originally included $7.1B in emergency E-Rate funding for home Internet. This was revised downward to $3.2B. The funds were to come from the Treasury instead of the USF (the phone tariff). USAC already had the processes in place, so it was a natural vehicle for implementing the program.

Build Back Better, signed by President Biden in November 2021, included an additional $475M for laptops and tablets, $300M for an Emergency Connectivity Fund, $280M to fund pilot projects to boost broadband access in urban areas, plus a few other expenditures. This money was on top of money that hadn't been spent from the previous program. The new program was called the Emergency Broadband Benefit Program (EBB). It was replaced by the Affordable Connectivity Program (ACP) in December of 2021, which provides funds for broadband, computers and tablets to households at or below 200% of the Federal Poverty Guidelines. ACP is a long-term $14B program, and the money is expected to run out by mid-2024.

There is money sloshing around. The effects of being out of the classroom for almost two years are still making themselves known. Where does this money go?

Schools deserve the help. In scholastic computing, schools are the most demanding environments of all (with the possible exception of warehouses). Schools have a dense wireless population that gets up and moves every 45 minutes. They're being hacked from within. A district regularly hits bit rates of 500 Mbit/S, provided it has the necessary Internet connection. The wireless and wired networking technology available today is more than schools currently need. But schools are acquiring it anyway, for several reasons:

- Equipment inadequacy

- More demanding applications

- Vendor end-of-support

- Technology improvements

- Available money

- Competition

Competition

For universities and private schools, good networking has been a competitive issue.

Taking wireless specifically, in the late 2000s wireless was a competitive issue for schools. Public schools weren't affected by it so much, except for a desire to serve their communities. However, our private school and university customers saw good Internet and good wireless as lifeblood issues.

Competing for students in both day schools and boarding schools required good connectivity to match the expectations of the parents paying the bills; they wanted to be able to FaceTime their children. The kids cared even more; they wanted good Internet, and if the school didn't have it, it was a bad school (in their minds). We had customers in Western Connecticut and mid-state New York for whom good Internet and WiFi were a student-retention must-have.

End-of-Support

Vendors can resell the same basic functionality, perhaps with an uplift, by ending support for older technology.

While technology is obsolescing your gear, manufacturers are forcing turnover in other ways, too: they retire devices with end-of-sale and end-of-support. They also replace whole product families. We’ve sold some customers three different lines of switches from the same manufacturer in the last ten years! The reason? The manufacturer abandoned existing lines, even though the products were great and would have had additional service life.

Obsolescence

New applications can make older technologies obsolete, while newer technologies make new applications possible, which in turn make older technologies obsolete...

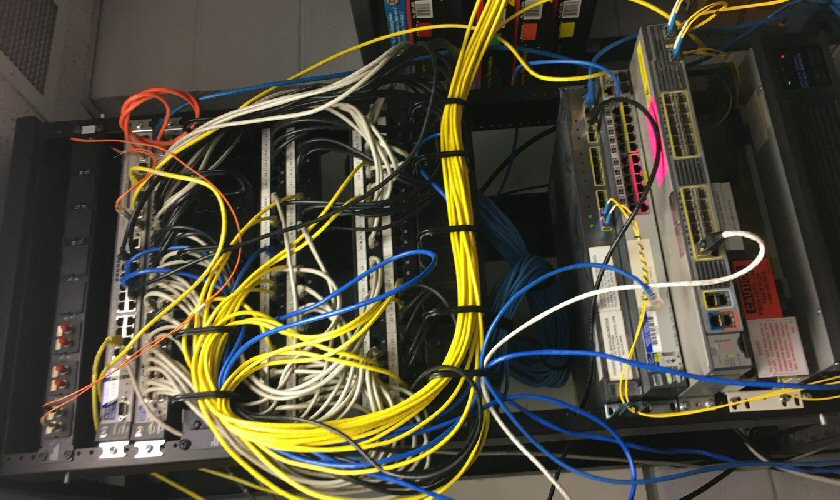

I laugh to myself when I go into a school customer's MDF with a new switch, firewall or wireless controller and see the last two generations of the same kinds of devices in a pile, in a corner. We have customers who've left old generations of access points in the ceiling. While Moore's law has hit the wall for computing power, networking has kept improving. Even today, your school is likely to be a generation behind in terrestrial and wireless networking technology.

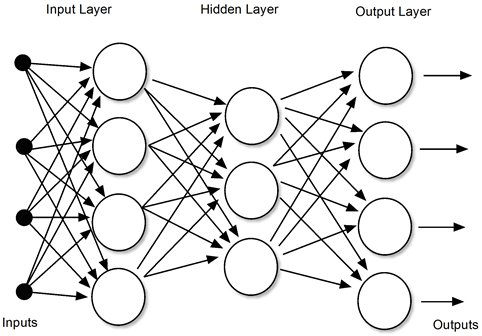

There's a feedback loop between applications and technologies that fuels both. For instance, a laptop from ten years ago doesn't have the horsepower to run all the JavaScript advertisements pushed out by any site you might visit. A room full of people on Zoom requires a faultless wireless network and Internet connection. Keeping up with the applications, and letting applications exploit the network, has required refresh after refresh!

In fifteen years, wired networks have gone from 100 Mbit/S to Gigabit to 10 Gigabit on pedestrian network equipment. Modern wireless--an engineering marvel--has squeezed amazing performance and density out of the spectrum and the classroom space. But, user devices haven't even caught up with the last big innovation, and the next one is already for sale.

Networks are getting ahead of applications. It’s time for new applications!

Demanding Applications

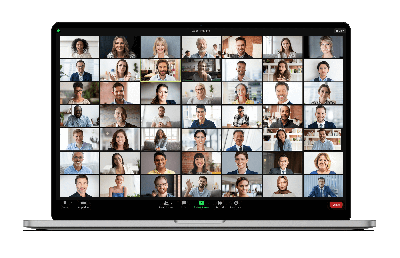

I had a friend and boss at United Technologies Research Center. He was a PhD mathematician working in a group that created fluid dynamics models for airfoils. His name was Bill Smith (not his real name; changed for this story). Bill was a good speaker and interested in all things computers. In the early 1990s, he became enthralled with video conferencing. The video compression algorithm in use at the time, pre-MPEG-1, was not all that efficient. This forced a trade-off between link cost and video quality: if you wanted a decent picture, you needed fast connections. Moreover, there weren't Internet aggregation sites, and most sites didn't have Internet connectivity to meet the requirements anyway. Data had to be brought into a private hub site. At that time, ISDN infrastructure with bandwidths of T1 speeds (1.5 Mbit/S or better) was required. A dial-up ISDN circuit cost over $1000/month, one for each end-point.

Video conferencing equipment was even more expensive at scale. A typical outfitted conference room required ten thousand dollars in cameras, encoders and decoders for a medium-quality video conference experience. The aggregation site could cost many tens of thousands. Under Bill's urging, United Technologies made an investment in video conferencing. This included installations at Pratt & Whitney, Sikorsky Aircraft, Carrier, Norden Technologies, UT Automotive and the Research Center. Ironically, a number of these divisions were in the business of moving people from place to place; video conferencing ran counter to their mission because of its potential to cut into business travel.

The installations worked, but they weren't used often. People had to travel to the local video conference room. Even if that was just across the building, a phone call could be just as good in many cases. Eventually, Bill got fired over the time and expense--and for making his bosses look foolish for supporting a very visible waste of money and effort.

Ready Money

Schools will acquire technologies that they would otherwise have to go without.

E-Rate drives acquisitions. Its use is entirely legitimate and even laudable. However, ready money causes market distortions. I think, for instance, about the case of the city of New London, near me on the southeast coast of Connecticut. New London has a 90% USAC discount rate, which means that if they want to buy something that costs a dollar, they need to come up with 10 cents. In 2012, years before I'd even seen a 10 Gbit/S network, New London had one running throughout the city with the help of E-Rate funds, a sharp salesman and 10 cents from the taxpayers.

Public schools started building out their networks before E-Rate funds were available for Internet access. E-Rate money was available for telephony in the beginning, followed by some network access. In 2015, C2 E-Rate money could be applied to network infrastructure. We saw it as an opportunity. Manufacturers saw it as an opportunity. The race was on! Internet content of every sort was being developed anyway, but the market for educational products, video-on-demand and distance learning soared.

What the pandemic showed us:

It took 20 to 40 years to prove video conferencing was useful.

Bill's problem was that he was ahead of his time and ahead of the technology, as the COVID-19 boom in video conferencing demonstrated. The co-dependent feedback loops of technology enabling applications and applications requiring technology were very apparent during the pandemic. We had been building the network to support world-wide video conferencing all along. We just weren't aware of it until 2020.

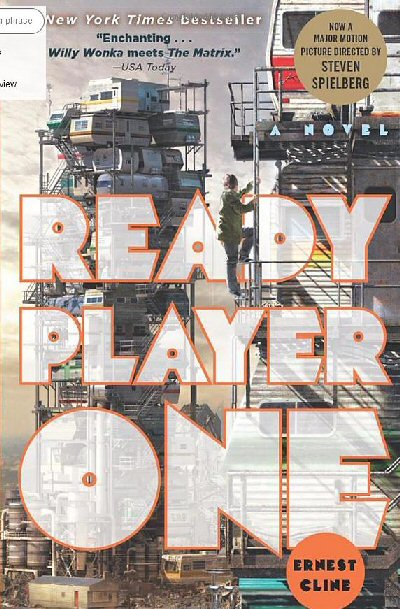

Where is this all going? Maybe here?

I believe that, just as Bill Smith was ahead of his time with video conferencing, Meta and others are ahead of their time with VR. The infrastructure for the future of remote learning is being built now. 4K stereo 120 frame/sec graphics will burn up some of this bandwidth.
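To put a rough number on that claim, here is a back-of-the-envelope calculation of the uncompressed bit rate for such a stream. The resolution per eye (3840x2160), 24 bits per pixel and the absence of any compression are assumptions for illustration, not figures from any headset vendor.

```python
# Back-of-the-envelope raw bit rate for stereo 4K video at 120 frames/sec.
# Assumptions (not from the text): 3840x2160 per eye, 24 bits per pixel,
# no chroma subsampling and no compression.


def raw_bitrate_gbps(width: int, height: int, eyes: int, fps: int,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed video bit rate in Gbit/s (1 Gbit = 10**9 bits)."""
    bits_per_second = width * height * eyes * fps * bits_per_pixel
    return bits_per_second / 1e9


rate = raw_bitrate_gbps(3840, 2160, eyes=2, fps=120)
print(f"{rate:.1f} Gbit/s uncompressed")  # prints: 47.8 Gbit/s uncompressed
```

Real headsets stream compressed video, which cuts this by orders of magnitude, so treat the result as an upper bound rather than a forecast of per-headset traffic.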

There is VR content for the classroom for sale now. But VR is not the classroom itself. Meta has created gathering spaces, and there are some startups. It may seem impossible to us that someone could spend a whole day in virtual or augmented reality. I get sick playing Mario Kart! But kids will be able to do it. Meta is betting on it. According to recent data sent out in an email from Plume, the number of home VR headsets didn’t increase over 2022, but the data usage soared by 84%.

Summary

Looking at Connecticut, in the early 2000s, then-Governor Jodi Rell's pet project was the establishment of the Connecticut Education Network, or CEN. Public funds were spent on gigabit fiber rings (at $14K/linear mile) and multi-gigabit interconnectivity at the core of the network. Each of Connecticut's 169 towns received a 1-Gbit/S hand-off from the network. This was unheard-of Internet speed at the time.

I was working for CEN in 2004 when the first iteration of the network was essentially complete. It was a state job, and not well-defined. So, I took it upon myself to reach out to school districts that hadn't yet connected. "But, we already have an Internet connection," they would say. Typically the existing connection was a T1 (1.5 Mbit/S) or so. The demands were modest. There was no wireless in schools in 2004 and little specific online content. I would explain, "But this is really fast." And it didn't cost anything at the time.

People didn't know why they needed it. Fast networking needed to be needed. And to be needed required applications that consumed bandwidth. And there really weren't any. But, there was money being thrown at the Internet and that money created markets that would have developed more slowly on their own.

Over the brief lifetime of the Internet in schools, the internal network, external bandwidth, available applications and subsidies have spurred each other on to increase the network technology in schools. We have customers with 20s of Gbit/S feeding network closets. There are no applications for it yet, but they will come.

-Kevin Dowd