Network Infrastructure is Changing

Last year's switches were very appliance-like: a narrow mix of layer-2, layer-3 and Power-over-Ethernet features, just as switches had been for about fifteen years. Switches you buy this year will behave like last year's when you need them to; you can still program them individually at layer 2 or layer 3 from the command line. But they're different.

As with phones, cars, televisions and other electronics, network switches (and access points) have benefited from large increases in computing power. New devices run general-purpose operating systems instead of embedded firmware, on far more powerful hardware than earlier generations. So, in addition to switching and routing, they can run general-purpose, computer-like workloads, and their capabilities can be extended.

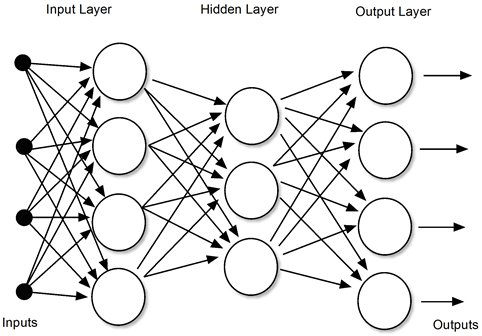

Like what? The most-hyped new capability in network equipment is AI: the ability to take observations from the past and apply them to your network in the present. Moreover, it is the ability to pool and organize observations across many organizations' networks so that every network becomes more resilient. This includes giving the network the power to recognize issues and heal itself.

Switches are smarter in non-AI ways, too. We've had NAC (network access control) for a long time, and barely used it. Now you can program the switches to recognize and classify a device, such as a printer or AP, and assign it the appropriate VLAN and permissions on their own. Switches can often apply ACLs and bandwidth limits without the help of a central authentication server.
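Conceptually, the classification step is a lookup from a device fingerprint (for example, an LLDP system description or a DHCP vendor string) to a port policy. The sketch below is purely illustrative: the fingerprint rules, VLAN numbers, ACL names, rate limits and the quarantine fallback are all hypothetical, and real switches use vendor-specific profiling engines rather than anything like this code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Policy:
    vlan: int
    acl: str
    rate_limit_mbps: Optional[int]  # None means no bandwidth cap


# Hypothetical policy table: device class -> VLAN, ACL name and bandwidth cap.
POLICIES = {
    "printer": Policy(vlan=30, acl="PRINTERS-ONLY", rate_limit_mbps=10),
    "access_point": Policy(vlan=10, acl="AP-MGMT", rate_limit_mbps=None),
    "ip_phone": Policy(vlan=20, acl="VOICE", rate_limit_mbps=1),
}

# Hypothetical fallback: unrecognized devices land in a no-access VLAN.
QUARANTINE = Policy(vlan=999, acl="DENY-ALL", rate_limit_mbps=1)

# Hypothetical fingerprint rules: substring of the device's self-description -> class.
FINGERPRINT_RULES = [
    ("laserjet", "printer"),
    ("access point", "access_point"),
    ("ip phone", "ip_phone"),
]


def classify(fingerprint: str) -> Optional[str]:
    """Return the first device class whose rule matches the fingerprint, or None."""
    text = fingerprint.lower()
    for needle, device_class in FINGERPRINT_RULES:
        if needle in text:
            return device_class
    return None


def policy_for(fingerprint: str) -> Policy:
    """Map a fingerprint to the policy the switch port should apply."""
    device_class = classify(fingerprint)
    if device_class is None:
        return QUARANTINE
    return POLICIES[device_class]


if __name__ == "__main__":
    for seen in ["HP LaserJet Pro M404dn", "Example Access Point AP-100", "Unknown laptop"]:
        print(seen, "->", policy_for(seen))
```

Defaulting unknown devices to a restricted policy, rather than to an open one, is the usual design choice for this kind of automatic assignment.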

Switches needn't be configured onesy-twosy any longer. Configuration consistency, visibility and resiliency can be managed for the whole LAN or WAN at once, from either a local or a cloud-based manager. Software-defined networking, once the stuff of expensive data center switches, is right beneath the surface on many of these devices. The ability to create arbitrary logical network architectures, to extend encrypted VLANs safely from far-away cores, and to secure them is available if you want to use it.
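One way to picture fleet-wide consistency is "intended state versus actual state": a single baseline is defined once, and every switch's reported settings are checked against it. The sketch below assumes the manager can already collect each switch's settings as a simple dictionary; the setting names and values are hypothetical and do not correspond to any particular vendor's data model.

```python
from typing import Dict, Tuple

# Hypothetical baseline shared by every access switch in the fleet.
BASELINE: Dict[str, object] = {
    "ntp_server": "10.0.0.1",
    "snmp_version": "v3",
    "voice_vlan": 20,
    "stp_mode": "rstp",
}


def drift(actual: Dict[str, object],
          baseline: Dict[str, object] = BASELINE) -> Dict[str, Tuple[object, object]]:
    """Return {setting: (expected, actual)} for every setting that differs or is missing."""
    return {
        key: (expected, actual.get(key))
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }


def audit(fleet: Dict[str, Dict[str, object]]) -> Dict[str, Dict[str, Tuple[object, object]]]:
    """Check every switch against the same baseline; report only the ones that drifted."""
    report = {}
    for name, config in fleet.items():
        diffs = drift(config)
        if diffs:
            report[name] = diffs
    return report


if __name__ == "__main__":
    fleet = {
        "sw-lobby": dict(BASELINE),
        "sw-lab": {**BASELINE, "snmp_version": "v2c"},
    }
    print(audit(fleet))  # only sw-lab is reported
```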

Wireless has advanced remarkably, too. Controller-based and hive (virtual controller) wireless is being supplanted by WiFi networks in which every AP is its own controller. Consider that if every access point has a 2.5 or 5 Gbit/s interface of its own, then centralized operation and switching will become a liability for larger deployments, because a central controller would have to carry the combined traffic of every AP. In the latest paradigm, AI and central configuration services manage and update the network, but the access point bridges traffic onto the LAN at wire speed, cooperating with its neighbors but acting on its own behalf.
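The bottleneck argument is simple arithmetic. The sketch below compares the traffic that would have to pass through a central controller (every AP tunneling its clients' traffic to it) with a controller's forwarding capacity. The AP count, utilization and the 40 Gbit/s controller capacity in the example are hypothetical numbers chosen only to illustrate the scaling.

```python
def aggregate_gbps(num_aps: int, uplink_gbps: float, utilization: float) -> float:
    """Traffic a central controller must forward if every AP tunnels to it."""
    return num_aps * uplink_gbps * utilization


def controller_is_bottleneck(num_aps: int, uplink_gbps: float,
                             utilization: float, controller_gbps: float) -> bool:
    """True when the combined AP traffic exceeds the controller's capacity."""
    return aggregate_gbps(num_aps, uplink_gbps, utilization) > controller_gbps


if __name__ == "__main__":
    # Hypothetical campus: 200 APs with 2.5 Gbit/s uplinks, each 25% busy at peak.
    load = aggregate_gbps(200, 2.5, 0.25)
    print(f"{load} Gbit/s through the controller")            # 125.0 Gbit/s
    print(controller_is_bottleneck(200, 2.5, 0.25, 40.0))      # True
```

When each AP bridges locally instead, each switch port only carries that one AP's share, so the load is spread across the access layer rather than concentrated in one box.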

Three features of WiFi 6 are going to make tomorrow's wireless networks unrecognizably fast compared with today's, especially in dense deployments. The first is new modulation, particularly 1024-QAM, which carries 10 bits per symbol (versus 8 for WiFi 5's 256-QAM) and pushes wireless into the gigabit+ range with a reasonable number of antenna chains. The second, BSS Coloring, greatly improves the efficient use of crowded radio space. The third, OFDMA, lets an AP share a single transmission among several clients, greatly improving transmission-cycle efficiency. If that's not enough, WiFi 6E is out, adding new spectrum in the 6 GHz band with room for many very wide (160 MHz) channels for huge performance. Look back at some of our earlier communications to learn more about these capabilities.
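To see how 1024-QAM and wide channels reach gigabit rates, the PHY data rate can be computed as data subcarriers × bits per subcarrier × coding rate × spatial streams ÷ symbol time. The sketch below uses the 802.11ax (HE) full-channel data-subcarrier counts and the 12.8 µs symbol plus a 0.8 µs guard interval. It gives the raw PHY rate only; real-world throughput is noticeably lower after protocol overhead.

```python
import math

# 802.11ax (HE) data subcarriers for a full-width, single-user transmission.
DATA_SUBCARRIERS = {20: 234, 40: 468, 80: 980, 160: 1960}
HE_SYMBOL_US = 12.8  # OFDM symbol duration without guard interval, in microseconds


def he_phy_rate_mbps(width_mhz: int, qam_order: int, coding_rate: float,
                     spatial_streams: int, gi_us: float = 0.8) -> float:
    """Raw HE PHY rate in Mbit/s (bits per microsecond equals Mbit/s)."""
    bits_per_subcarrier = int(math.log2(qam_order))  # 1024-QAM -> 10 bits
    bits_per_symbol = (DATA_SUBCARRIERS[width_mhz] * bits_per_subcarrier
                       * coding_rate * spatial_streams)
    return bits_per_symbol / (HE_SYMBOL_US + gi_us)


if __name__ == "__main__":
    print(round(he_phy_rate_mbps(80, 1024, 5 / 6, 1), 1))   # one stream, 80 MHz
    print(round(he_phy_rate_mbps(160, 1024, 5 / 6, 2), 1))  # two streams, 160 MHz
    print(round(he_phy_rate_mbps(80, 256, 5 / 6, 1), 1))    # 256-QAM for comparison
```

With two spatial streams on a 160 MHz channel this works out to roughly 2.4 Gbit/s, and the 1024-QAM versus 256-QAM ratio is exactly 10/8, a 25% gain at the same channel width.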

There is a limit to the number of bitcoins: 21 million in total, with about 2 million left to be mined. Presently, about 900 new bitcoins are created per day (6.25 BTC per block, at roughly 144 blocks per day). That rate keeps shrinking because the block reward halves every 210,000 blocks (about every four years), while the network adjusts the hash target so that blocks keep arriving about every ten minutes no matter how much mining power joins. It will be around the year 2140 before the last one is mined. There's investment and reward in the effort: Russia just took delivery of a 70 MW crypto mining farm. It is said that almost 65% of the cryptomining resources are in China. Bitcoin broke $50,000 on Tuesday. Tesla, Elon Musk's company, disclosed a $1.5 billion bitcoin purchase a couple of weeks ago. Some projections run as high as $500,000 per bitcoin.
